ISSN : 2393-8854

Global Journal of Research and Review

Plant Classification

Anusha A R1*, Arpitha M C1, Ashik Hatter S1, Navami B G1, Rashika N1, Dr. Pradeep N2

1Department of CSE, Final year student, BIET College, Davangere, India

2Department of CSE, Associate Professor, BIET College, Davangere, India

- *Corresponding Author:

- Anusha A R

Department of CSE, Final year student, BIET College, Davangere, India

Tel : 9113817729

E-mail: anusharavikumar666@gmail.com

Received Date: July 28, 2021; Accepted Date: October 11, 2021; Published Date: October 21, 2021

Citation: Anusha A R, Arpitha M C, Ashik H S, Navami B G, Rashika N, Pradeep N (2021) Plant Classification, Glob J Res Rev, Vol: 8 No: 7

Abstract

The agricultural sector has recognized that effective crop management requires relevant information about plants. Weeds have a devastating impact on crop production and yield. Current practice relies on uniform application of herbicides, which leads to high costs and degrades both the environment and field productivity. The identification and classification of weeds are therefore of major technical and economic importance in the agricultural industry. A weed control system that automates these activities using features such as shape, color and texture is feasible. The deep learning method proposed in this project identifies the type of crop using a convolutional neural network (CNN). Different data augmentation techniques were used to improve the training and validation accuracy of the CNN model, and the results obtained are reported.

Keywords

CNN; Classification; Deep Learning

Introduction

Machine learning

The name machine learning was coined in 1959 by Arthur Samuel. Tom M. Mitchell provided a widely quoted, more formal definition of the algorithms studied in the machine learning field: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E."

Machine Learning (ML) is the scientific study of algorithms and statistical models that computer systems use to perform a specific task without using explicit instructions, relying on patterns and inference instead. It is seen as a subset of artificial intelligence. Machine learning algorithms build a mathematical model based on sample data, known as "training data", in order to make predictions or decisions without being explicitly programmed to perform the task. Machine learning algorithms are used in a wide variety of applications, such as email filtering and computer vision, where it is difficult or infeasible to develop a conventional algorithm for effectively performing the task [1].

Machine learning is closely related to computational statistics, which focuses on making predictions using computers. The study of mathematical optimization delivers methods, theory and application domains to the field of machine learning. Data mining is a field of study within machine learning, and focuses on exploratory data analysis through unsupervised learning. In its application across business problems, machine learning is also referred to as predictive analytics.

Deep learning

Deep learning is a machine learning technique inspired by the way the human brain filters information; it essentially learns from examples. It helps a computer model filter the input data through layers to predict and classify information. Since deep learning processes information in a manner similar to the human brain, it is mostly used in applications that people generally perform. It is the key technology behind driverless cars, enabling them to recognize a stop sign and to distinguish between a pedestrian and a lamp post. Most deep learning methods use neural network architectures, so they are often referred to as deep neural networks.

Deep learning is a branch of machine learning that is based entirely on artificial neural networks. Since a neural network mimics the human brain, deep learning is also a kind of imitation of the human brain. In deep learning, we do not need to explicitly program everything. The concept of deep learning is not new; it has been around for a number of years. It is receiving attention now because earlier we did not have as much processing power or data. As processing power has increased exponentially over the last 20 years, deep learning and machine learning have come into the picture. A formal definition of deep learning is given in terms of neurons [2].

The human brain contains approximately 100 billion neurons, and each neuron is connected to thousands of its neighbors. The question here is how we recreate these neurons in a computer. To do so, we create an artificial structure called an artificial neural net, made up of nodes or neurons. Some neurons hold the input values and some hold the output values, and in between there may be many interconnected neurons in the hidden layer.
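
The input, hidden and output neurons described above can be illustrated with a minimal sketch of a forward pass. The weights, the sigmoid activation and the function name `forward` are assumptions chosen for illustration (biases are omitted for brevity); they are not taken from the model used in this project.

```python
import math

def forward(inputs, hidden_weights, output_weights):
    """Propagate inputs through one hidden layer and one output layer."""
    # Each hidden neuron takes a weighted sum of all inputs, then applies a sigmoid.
    hidden = [
        1.0 / (1.0 + math.exp(-sum(w * x for w, x in zip(weights, inputs))))
        for weights in hidden_weights
    ]
    # Each output neuron takes a weighted sum of all hidden activations.
    return [sum(w * h for w, h in zip(weights, hidden)) for weights in output_weights]

# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
output_weights = [[1.0, -1.0]]
outputs = forward([1.0, 1.0], hidden_weights, output_weights)
print(outputs)
```

Training a network amounts to adjusting these weights so that the outputs match the desired values.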

Neural networks

Deep learning is a type of machine learning inspired by the structure of the human brain. Deep learning algorithms attempt to draw similar conclusions as humans would by continually analyzing data with a given logical structure. To achieve this, deep learning uses a multi-layered structure of algorithms called neural networks.

The design of the neural network is based on the structure of the human brain. Just as we use our brains to identify patterns and classify different types of information, neural networks can be taught to perform the same tasks on data.

The individual layers of neural networks can also be thought of as a sort of filter that works from gross to subtle, increasing the likelihood of detecting and outputting a correct result.

The human brain works similarly. Whenever we receive new information, the brain tries to compare it with known objects. The same concept is also used by deep neural networks.

Neural networks enable us to perform many tasks, such as clustering, classification or regression. With neural networks, we can group or sort unlabeled data according to similarities among the samples in this data. Or in the case of classification, we can train the network on a labeled dataset in order to classify the samples in this dataset into different categories.

Proposed work

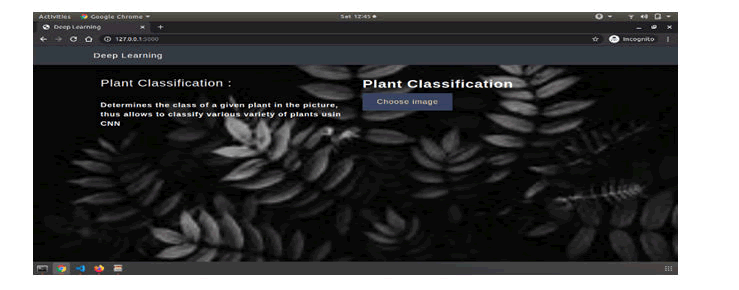

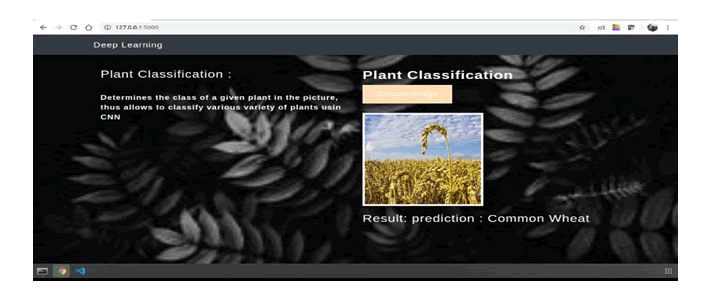

By using this model, we can precisely classify plants.

A convolutional neural network model is built from scratch to extract features from a given plant image and classify it to determine whether the image shows a plant or a weed.

A web application is built where the user can upload the plant image, and the result is shown on the UI.

Objectives

• To apply preprocessing techniques to the image dataset.

• To extract the most prominent features and apply CNN.

• To build a model that can detect the type of the Plant.

• The main objective of this project is to help the farmers to classify the plants and weeds more accurately using a deep learning model.

Dataset

We created our own dataset by taking image samples from the internet.

| Category | Train (images) | Test (images) |
|---|---|---|
| Black-grass | 111 | 24 |
| Common wheat | 110 | 26 |
| Small flowered cranesbill | 115 | 28 |
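
As a quick consistency check, the per-category counts in the table can be summed to obtain the size of each split. The sketch below only restates the table; the variable names are chosen for illustration.

```python
# Image counts per category, copied from the dataset table.
dataset = {
    "Black-grass": {"train": 111, "test": 24},
    "Common wheat": {"train": 110, "test": 26},
    "Small flowered cranesbill": {"train": 115, "test": 28},
}

train_total = sum(counts["train"] for counts in dataset.values())
test_total = sum(counts["test"] for counts in dataset.values())
total = train_total + test_total

print(train_total, test_total, total)      # 336 78 414
print(round(100 * test_total / total, 1))  # 18.8 (percent of images held out for testing)
```

The three classes are close to balanced, and roughly one image in five is held out for testing.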

Algorithm: CNN

Convolutional neural networks (CNNs) are among the most popular models used today. This neural network computational model uses a variation of multilayer perceptrons and contains one or more convolutional layers that can be either entirely connected or pooled. These convolutional layers create feature maps that record a region of the image, which is ultimately broken into rectangles and sent out for nonlinear processing [3].

Advantages

• Very high accuracy in image recognition problems.

• Automatically detects the important features without any human supervision.

• Weight sharing.

Disadvantages

• CNNs do not encode the position and orientation of objects.

• Lack of ability to be spatially invariant to the input data.

• Lots of training data is required.

In our project, as per the requirements, we have chosen the following classification model to obtain appropriate and accurate results: the convolutional neural network.

Different types of neural networks are used for different purposes. For example, to predict a sequence of words we use recurrent neural networks, more precisely an LSTM; similarly, for image classification we use a convolutional neural network.

Layers used to build ConvNets

A ConvNet is a sequence of layers, and every layer transforms one volume into another through differentiable functions.

Types of layers

Input Layer

This layer holds the raw input of the image.

Convolution Layer

This layer computes the output volume by computing the dot product between each filter and each image patch.
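
The dot product between a filter and each image patch can be sketched in plain Python. This is a minimal single-channel illustration with stride 1 and no padding; the function name `conv2d_valid`, the example image and the example filter are assumptions for illustration, not values from the project.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and take a dot product at each position."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product of the kernel with the patch whose top-left corner is (i, j).
            row.append(sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kernel_h)
                for b in range(kernel_w)
            ))
        output.append(row)
    return output

# Hypothetical 3x4 image and 2x2 filter.
image = [
    [1, 2, 3, 0],
    [4, 5, 6, 0],
    [7, 8, 9, 0],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[-4, -4, 3], [-4, -4, 6]]
```

A real convolution layer applies many such filters across several input channels and learns the filter values during training.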

Activation Function Layer

This layer applies an element-wise activation function to the output of the convolution layer. Some common activation functions are ReLU, sigmoid, tanh and leaky ReLU. We have used ReLU as well as softmax for our model, as this is a multi-class classification problem.
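
The element-wise activation functions named above can be written in a few lines. This is a minimal sketch; the leaky ReLU slope of 0.01 and the helper `apply_elementwise` are assumptions for illustration.

```python
import math

def relu(x):
    return max(0.0, x)

def leaky_relu(x, slope=0.01):
    # Small non-zero slope for negative inputs (0.01 is an assumed default).
    return x if x > 0 else slope * x

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)

def apply_elementwise(activation, feature_map):
    """Apply an activation function to every value of a 2D feature map."""
    return [[activation(value) for value in row] for row in feature_map]

# Hypothetical feature map with negative and positive values.
activated = apply_elementwise(relu, [[-4.0, 3.0], [0.0, 6.0]])
print(activated)  # [[0.0, 3.0], [0.0, 6.0]]
```

Each function maps a single number to a single number, so the shape of the feature map is unchanged.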

Pool Layer

This layer is periodically inserted in the ConvNet. Its main function is to reduce the size of the volume, which speeds up computation, reduces memory use and also helps prevent overfitting. Two common types of pooling layers are max pooling and average pooling.

Dense layer

It is the regular, fully connected neural network layer.
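
Both pooling variants can be sketched with non-overlapping windows. The function name `pool2d`, the 2x2 window size and the example feature map are assumptions for illustration.

```python
def pool2d(feature_map, size=2, mode="max"):
    """Reduce a 2D feature map using non-overlapping size x size windows."""
    if mode == "max":
        reduce_window = max
    else:
        reduce_window = lambda values: sum(values) / len(values)
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + a][j + b]
                for a in range(size)
                for b in range(size)
            ]
            row.append(reduce_window(window))
        pooled.append(row)
    return pooled

# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]
print(pool2d(feature_map, mode="max"))      # [[6, 4], [8, 9]]
print(pool2d(feature_map, mode="average"))  # [[3.75, 2.25], [4.5, 4.0]]
```

A 2x2 window halves each spatial dimension, so the 4x4 input becomes a 2x2 output.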

Accuracy is defined as the ratio of the number of samples correctly classified by the classifier to the total number of samples for a given test data set.
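
This definition translates directly into code. The labels below are hypothetical and serve only to illustrate the ratio; they are not results from this project.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label matches the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one sample is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

# Hypothetical labels for five test images.
true_labels = ["Black-grass", "Common wheat", "Common wheat",
               "Small flowered cranesbill", "Black-grass"]
predicted_labels = ["Black-grass", "Common wheat", "Black-grass",
                    "Small flowered cranesbill", "Black-grass"]
print(accuracy(true_labels, predicted_labels))  # 0.8
```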

We have used the ReLU activation function. ReLU stands for Rectified Linear Unit and performs a non-linear operation.

The output is f(x) = max(0, x).

ReLU's purpose is to introduce non-linearity into our ConvNet, because the real-world data we want the ConvNet to learn is generally non-linear. We have used the softmax function in the output layer, as this is a multi-class classification problem.
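
The softmax function converts the raw scores of the final layer into probabilities that sum to 1, one per class. The sketch below uses hypothetical scores; the class names come from the dataset table, but their order is an assumption.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtracting the maximum score avoids overflow without changing the result.
    shift = max(scores)
    exps = [math.exp(score - shift) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

class_names = ["Black-grass", "Common wheat", "Small flowered cranesbill"]
scores = [2.0, 1.0, 0.1]  # hypothetical outputs of the last dense layer
probabilities = softmax(scores)
predicted = class_names[probabilities.index(max(probabilities))]
print(predicted)  # Black-grass
```

The class with the largest probability is taken as the prediction.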

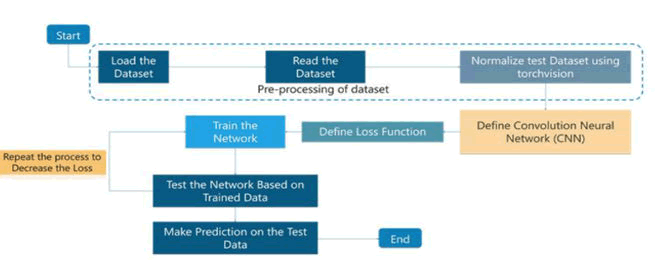

Implementation

In this section, the steps performed to obtain the desired output are explained with the help of a flow diagram.

Data Collection

We created our own dataset for this project by taking image samples from the internet. Our dataset consists of 414 images, which are divided into train and test datasets [4].

Pre-Processing

The dataset is pre-processed to remove noise and clean the data. The pre-processed data is then used for training.
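
The exact pre-processing steps are not listed here. One common step, shown as an assumption rather than as the method used in this project, is to rescale 8-bit pixel values from the range 0 to 255 into the range 0 to 1 before training.

```python
def normalize_pixels(image):
    """Rescale 8-bit pixel values (0-255) to floats in the range 0.0-1.0."""
    return [[value / 255.0 for value in row] for row in image]

# Hypothetical 2x2 grayscale image.
image = [
    [0, 255],
    [51, 102],
]
normalized = normalize_pixels(image)
print(normalized)
```

Keeping all inputs on the same small scale generally makes gradient-based training more stable.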

Defining Model architecture

For image classification we use a convolutional neural network. Defining its architecture is a crucial step in our deep learning model building process. We have to define how our model will look, which requires importing the libraries, initializing the model, adding the CNN (convolutional neural network) layers and adding the dense layers.

Configure the learning process

With both the training data and the model defined, it is time to configure the learning process. This is accomplished with a call to the compile() method of the Sequential model class.

Train and Test Data

For training, we first split the dataset into two parts: ‘training data’ and ‘testing data’. The classifier is trained on the ‘training data set’, and its performance is then tested on the unseen ‘test data set’.
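
A random split of this kind can be sketched with the standard library. The file names, the 20% test fraction and the fixed seed are assumptions for illustration; the split actually used in this project is the one given in the dataset table.

```python
import random

def split_dataset(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and split them into (train, test) lists."""
    shuffled = list(samples)
    # A fixed seed makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    test_size = round(len(shuffled) * test_fraction)
    return shuffled[test_size:], shuffled[:test_size]

# Hypothetical file names standing in for the 414 collected images.
image_paths = [f"image_{index:03d}.png" for index in range(414)]
train_paths, test_paths = split_dataset(image_paths)
print(len(train_paths), len(test_paths))  # 331 83
```

No image appears in both lists, so the test set remains unseen during training.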

Train and save the model

At this point we have training data and a fully configured neural network to train with that data. All that is left is to pass the data to the model for the training process to commence, a process which is completed by iterating over the training data. Training begins by calling the fit() method. The trained model is then saved for future use.

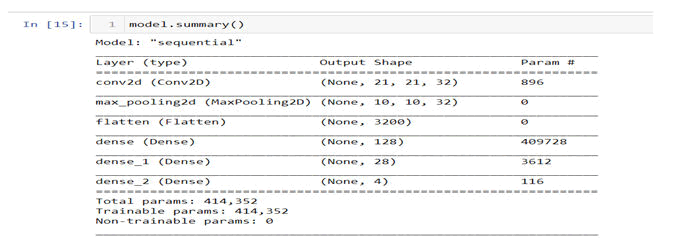

Model

Figure 2: Model summary.

• tf.keras.layers.Conv2D(): the convolution layer, which improves image recognition by isolating image features

• tf.keras.layers.MaxPooling2D(): a layer to reduce the information in an image while maintaining features

• tf.keras.layers.Flatten(): flatten the result into 1-dimensional array

• tf.keras.layers.Dense(): add densely connected layer

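• Loss: the loss function passed to compile(). Binary cross entropy is the most suitable loss when the final step uses a sigmoid activation that results in either 0 or 1 (for example, normal or pneumonia); for the three-class softmax output used here, categorical cross entropy is the corresponding choice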

• Metrics: accuracy is the measurement metric to obtain the prediction accuracy rate on every epoch [5].
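
For a softmax output over three classes, the multi-class counterpart of binary cross entropy is categorical cross entropy. The sketch below shows how it is computed for a single sample; the probabilities are hypothetical and the function name is chosen for illustration.

```python
import math

def categorical_cross_entropy(true_one_hot, predicted_probs, eps=1e-12):
    """Cross-entropy loss for one sample with a one-hot encoded true label."""
    # Clamping with eps avoids log(0) when a predicted probability is exactly zero.
    return -sum(
        target * math.log(max(prob, eps))
        for target, prob in zip(true_one_hot, predicted_probs)
    )

# Hypothetical sample whose true class is the second of three.
true_one_hot = [0, 1, 0]
predicted_probs = [0.25, 0.5, 0.25]
loss = categorical_cross_entropy(true_one_hot, predicted_probs)
print(round(loss, 4))  # 0.6931
```

The loss is zero only when the model assigns probability 1 to the correct class, and it grows as that probability falls.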

Result

Conclusion

This study has demonstrated how plants and weeds can be classified from a small dataset of images. The model was built from scratch, which distinguishes it from existing approaches such as transfer learning. When the neural network was trained on a dataset containing three species, it performed well in recognizing each variety of plant. As a result, the accuracy on the test dataset reached 98.07%, with a loss of 93.30%, a val_loss of 45.13% and a val_accuracy of 83.33%, which indicates a decent model. Possible solutions to the remaining problems are a promising area for further research.

References

- Hands-On Machine Learning with Scikit-Learn and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, Aurelien Geron

- Chaki, R. Parekh, and S. Bhattacharya, “Plant leaf classification using multiple descriptors: A hierarchical approach,” J. King Saud Univ. - Comput. Inf. Sci 1–15, 2018.

- M. Prakash, “Detection of Leaf Diseases and Classification using Digital Image Processing,” 2017.

- Kamilaris and F. X. Prenafeta-boldú, “Deep learning in agriculture: A survey,” vol. 147, no. July 2017, pp. 70–90, 2018.

- Deep Learning in Computer Vision: Principles and Applications, edited by Mahmoud Hassaballah and Ali Ismail Awad.
